Artificial Intelligence: Mathematical Imitator or Genuine Thinker?

Artificial intelligence is said to be revolutionizing the world and even threatening the entire human race. Other experts consider the doomsday scenarios exaggerated and unfounded. The truth lies somewhere in between. There are already areas where AI outperforms humans as well as areas where humans are superior – at least for now.

More than half a century ago, Alan Turing and Michael Polanyi debated the differences between machine and human thinking, making genuine scientific history. Even then, they concluded that computers were superior to humans in various rule-based computational tasks. However, as Polanyi and many others pointed out, human thinking is not just about following rules but a much broader phenomenon.

Is imitation intelligence?

Turing argued that machines capable of learning would be built in the future, with control mechanisms that incorporate mathematical mutations and feedback, allowing their operation to resemble human learning. Such machines would learn things independently, so that their operation would no longer be based on rules predetermined by humans. They would no longer be calculators that simply execute commands; they would have a much greater ability to learn regularities from their observations and act autonomously.

Turing’s ideas were criticized by experts like Polanyi, who emphasized that imitation is only a very small part of human thinking ability. More broadly, critics believe that essential aspects of human thinking, such as consciousness, intentionality, creativity, and questioning, are not yet understood. These cannot be reduced to a world of complex rules, and they are missing from machines. The famous Polanyi paradox, “we know more than we can tell”, aims to describe this kind of holistic human thinking and behaviour. Polanyi also coined the term “tacit knowledge” to refer to the expertise and understanding that is learned and experienced in practice and cannot be expressed with symbols.

Turing found many of the objections too vague to lead to a meaningful conclusion, as it was not possible to measure genuine creativity or consciousness. Even today, it is impossible to determine what true consciousness or genuine creativity is. Turing believed that measuring a machine’s ability to imitate humans would make measuring genuine consciousness or creativity unnecessary. He claimed that machines could learn to imitate humans so well that people would not be able to distinguish them from humans. This ability could be measured scientifically, and for this purpose he introduced the famous “Turing Test”.

However, both thinkers agreed that machines think differently than humans. Their perspectives were very different, although equally important. When discussing artificial intelligence, one must understand the fundamental differences between these two perspectives – whether we are talking about blind imitation or creative thinking.

Challenges of Artificial Intelligence Learning

For a long time, we have been hearing marketing speeches about artificial intelligence (AI), but on closer examination the alleged achievements of AI have not always turned out to be true. At the time of the original presentation, there was less talk about the challenges limiting AI’s potential for use and the dangers in its usage, and what discussion there was took place mainly within the scientific and development communities. More recently, these concerns have become widespread among the general public as well.

Nowadays, it is widely known that AI can statistically outperform humans in increasingly demanding tasks and in a growing number of subject areas. However, it has been found that AI can also amplify biases, be susceptible to manipulation, and contain hidden errors.

For example, AI learns efficiently from the data it is given, but it also unknowingly learns the bad things hidden in that data. It may blindly repeat one-sided coincidences in the data as well as the undesirable sides of human behaviour reflected in it. In practice, this so-called bias can manifest itself, for example, in AI-based diagnostics that recommend worse treatment for dark-skinned people, or in AI-assisted recruitment that screens job applications and rejects women because that is how hiring was done in the past.
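The recruitment example can be made concrete with a deliberately naive sketch. The records, group labels, and the threshold below are invented for illustration and do not describe any real system; the point is only that a model fitted to skewed historical decisions reproduces the skew.

```python
from collections import defaultdict

# Hypothetical historical hiring records: (group, was_hired).
# The numbers are invented purely for illustration.
history = (
    [("men", True)] * 80 + [("men", False)] * 20
    + [("women", True)] * 20 + [("women", False)] * 80
)

def fit_hire_rates(records):
    """Learn the historical hire rate per group (a deliberately naive model)."""
    hired = defaultdict(int)
    total = defaultdict(int)
    for group, was_hired in records:
        total[group] += 1
        hired[group] += int(was_hired)
    return {group: hired[group] / total[group] for group in total}

def recommend(rates, group, threshold=0.5):
    """Recommend a candidate only if their group was usually hired in the past."""
    return rates[group] >= threshold

rates = fit_hire_rates(history)
print(rates)                      # hire rate per group, as learned from the data
print(recommend(rates, "men"))    # True
print(recommend(rates, "women"))  # False
```

Nothing in the code mentions qualifications; the model simply repeats what was done before, which is exactly the kind of bias described above.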

Artificial intelligence can also be manipulated by skilled individuals, and even minor factors that are irrelevant to humans can disturb AI’s reasoning process. This phenomenon has been explored in numerous studies. For instance, AI has been tricked into identifying 3D-printed turtles as rifles by altering their surface patterns [1]. Additionally, AI has been tricked into recognizing animal species in disordered, colorful mixtures that a human eye would find incomprehensible.
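How a change that looks negligible can flip a decision is easiest to see on a toy linear classifier. The sketch below is not the method used in [1]; it is a simple sign-based perturbation, and the weights, the four-value "image", and the labels are all hypothetical.

```python
# Hypothetical linear classifier: score > 0 means "turtle", otherwise "rifle".
WEIGHTS = [0.9, -0.8, 0.7, -0.6]

def score(pixels):
    """Weighted sum of the inputs."""
    return sum(w * x for w, x in zip(WEIGHTS, pixels))

def classify(pixels):
    return "turtle" if score(pixels) > 0 else "rifle"

def perturb(pixels, epsilon):
    """Shift each input by exactly epsilon in the direction that lowers the score."""
    return [x - epsilon * (1 if w > 0 else -1) for w, x in zip(WEIGHTS, pixels)]

image = [0.50, 0.50, 0.50, 0.50]
adversarial = perturb(image, epsilon=0.05)

print(classify(image))        # turtle
print(classify(adversarial))  # rifle
```

Each input moves by only 0.05, yet the small shifts all push the score in the same direction and together cross the decision boundary. Real image classifiers have vastly more inputs, which gives an attacker correspondingly more room to hide such a shift.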

These experiments reveal that manipulations that are invisible to humans may pose a significant risk, particularly if they are employed against humanity or society on a larger scale. For example, invisible patterns on traffic signs, roads or buildings could be used to exploit hidden weaknesses of AI systems and cause massive catastrophes. Alternatively, the patterns could be used to hide the true nature of objects in order to cause accidents or help in smuggling items. An AI system that has been deployed across the world would have the same weakness everywhere, and that could make the system a massive societal single point of failure.

Black-box AI systems also contain latent features whose consequences for their chains of reasoning are unpredictable. For example, Uber’s automated driving system drove into a pedestrian even though its sensors had detected the obstacle. For a long time it was not known why, but later analysis concluded that several situational characteristics made the AI’s reasoning fluctuate between options, leaving it unable to act in time [2]. As the AI changed the classification of the pedestrian several times, alternating between vehicle, bicycle, and other, the system was unable to correctly predict the path of the detected object, which led to the death of a person.
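One way an unstable classification can prevent path prediction is sketched below. This is a toy model, not the actual system: it assumes that the tracker discards an object's position history whenever the label changes, and the class name, labels, and positions are hypothetical.

```python
class NaiveTracker:
    """Toy tracker that forgets past positions whenever the object's label changes."""

    def __init__(self):
        self.label = None
        self.positions = []

    def observe(self, label, position):
        if label != self.label:
            self.label = label
            self.positions = []  # assumption: reclassification discards history
        self.positions.append(position)

    def predicted_velocity(self):
        """Estimate velocity from the last two positions, or None without history."""
        if len(self.positions) < 2:
            return None
        return self.positions[-1] - self.positions[-2]

def run(observations):
    """Feed (label, position) pairs to a fresh tracker and collect its estimates."""
    tracker = NaiveTracker()
    estimates = []
    for label, position in observations:
        tracker.observe(label, position)
        estimates.append(tracker.predicted_velocity())
    return estimates

flipping = [("vehicle", 0.0), ("other", 1.0), ("bicycle", 2.0), ("other", 3.0)]
stable = [("pedestrian", 0.0), ("pedestrian", 1.0), ("pedestrian", 2.0), ("pedestrian", 3.0)]

velocities_flipping = run(flipping)
velocities_stable = run(stable)
print(velocities_flipping)  # [None, None, None, None]
print(velocities_stable)    # [None, 1.0, 1.0, 1.0]
```

The object moves identically in both runs. Only the stable label lets the tracker accumulate enough history to estimate where the object is heading.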

Today, we have a vast amount of evidence of how AI-based systems fail due to bias, manipulation or unexpected anomalies. Unfortunately, due to the black-box problem, we have reached a point where “AI knows more than it can tell” as well (cf. Polanyi’s paradox). We do not have the ability to identify the critical hidden errors it contains in advance, nor can we determine the structures behind its decisions afterwards. Due to these challenges, AI can be extremely effective at solving specific problems in closed system environments, but it often acts unpredictably in uncontrollable open systems.

The weaknesses are wicked problems without clear solutions

The weaknesses of AI, such as its tendency to reinforce existing biases, its vulnerability to cheating, and its latent errors, present wicked problems with no clear solutions. These problems exist for reasons that are an inherent part of logical reasoning and are driven by pragmatic motives.

For example, a pragmatic dilemma arises when we prioritize politically correct but empirically less prevalent training materials, leading to politically desirable but empirically skewed results. In real-world markets and usage scenarios, an application that provides politically correct but less commonly needed results will be replaced by a better-functioning one that fits the actual use case better.

An example would be an automated news generator that overemphasizes ‘she’ in texts about male-dominated professions. A statistically faithful news generator would require less manual effort from reporters, who would otherwise have to fix the errors introduced by the deliberate skew. At the same time, the statistically faithful generator that meets the pragmatic needs of everyday reporting work strengthens existing social biases. In this way, an AI application that provides statistically better results works better in pragmatic usage situations, but it can also continue to reinforce existing biases that some parties find undesirable.

Another dilemma is related to predicting the future. It is widely recognized that predicting the future based solely on historical data is impossible. The more complex the phenomenon and the longer the timeframe, the harder it is to predict its future state. AI systems that rely solely on historical data cannot predict future events when conditions change significantly, as we witnessed during the Covid pandemic. Due to incomplete and outdated data, AI’s conclusions became incorrect worldwide.
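The effect of an abrupt change in conditions can be illustrated with a very simple forecaster. The sketch below uses a moving average as a stand-in for any model that learns only from history; the demand figures are invented and the function name is hypothetical.

```python
def moving_average_forecast(series, window=3):
    """Forecast the next value as the mean of the last `window` observations."""
    recent = series[-window:]
    return sum(recent) / len(recent)

# Hypothetical weekly demand: stable for a long time, then an abrupt collapse.
stable = [100, 102, 98, 101, 99, 100]
after_shock = [20, 18, 22]

# Under stable conditions the forecast for week 4, made from weeks 1-3, is close.
in_regime_error = abs(moving_average_forecast(stable[:3]) - stable[3])

# The same method, applied just before the shock, misses every later week badly.
forecast = moving_average_forecast(stable)
shock_errors = [abs(forecast - actual) for actual in after_shock]

print(in_regime_error)  # 1.0
print(shock_errors)     # [80.0, 82.0, 78.0]
```

The forecaster is not badly built; it simply has no information about a situation that never appeared in its history.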

Predictions are a wicked problem because the world is so complex that not all variables and associations can be taken into account. In addition, training and operational data samples are never perfect, and therefore the reasoning paths learned by AI may not align fully with reality. Due to the absence of vital but hidden variables and due to inaccurate data, AI’s conclusions can turn out to be incorrect and its reasoning completely incomprehensible when a new situation triggers a previously unknown latent reasoning path. As a result, machines may produce extremely surprising results when patterns and situations change significantly from previous routines.

The strengths of artificial intelligence will surprise us in the future

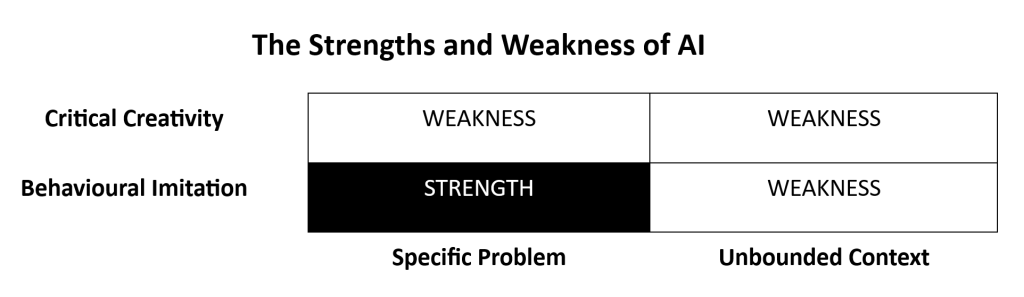

Currently, machines are learning to imitate humans in increasingly challenging tasks, doing things faster, better, and cheaper than humans. However, the strengths of artificial intelligence are limited to imitating given solutions to problems within a specific area – progress elsewhere has been very weak, if not non-existent (Figure 1). Current AIs cannot question their teacher, change the formulation of a question independently, or think beyond the limits given to them.

Figure 1: The strengths of artificial intelligence are limited to imitating solutions to specific problems within a bounded area. It still lacks genuine creativity and understanding in unbounded contexts.

However, in the future, artificial intelligence will surprise us by showing how many presumably human things are imitative and can be imitated within its own bounded box. Tasks that seemed to require creative and wide-ranging human expertise may turn out to be more routine than we have traditionally thought. They will turn out to be tasks suitable for artificial intelligence, which can perform them more consistently and effectively than humans!

One area that will, for this reason, turn to the advantage of machines in the future is nursing – the cradle of empathy and humanity. We will be surprised by how effectively emotions and social interaction can be imitated. At the time of the original presentation and article, there was not yet any clear evidence for this. However, a recent academic study found, for the first time to the author’s knowledge, that AI-based chatbot responses were considered more empathetic than human physician responses, which triggered an interest in translating and republishing this article [3].

An AI system that interacts with a patient is not in a bad mood because of a conflict at home or at work. It does not tire after a poor night’s sleep. It can face another person and adapt to their feelings. AI does not need genuine emotions. It learns to imitate gestures and social cues better than individual humans – it takes into account the individual needs of all parties by gesturing, touching, and discussing in the necessary way in all situations.

Another factor that will surprise people in the future is that the areas of use for artificial intelligence will significantly expand into larger systems than currently thought. Tasks that were supposed to require broad creative thinking will turn out to be automatable mass operations.

This applies especially to autonomous devices. AI’s weaknesses related to unexpected behaviour in unbounded contexts and to the lack of critical creativity can be minimized when, instead of dealing with unpredictable people, machines communicate with each other in limited contexts, solving routine situations. In these kinds of machine-to-machine contexts we will see a lot of surprises.

The Role of Humans

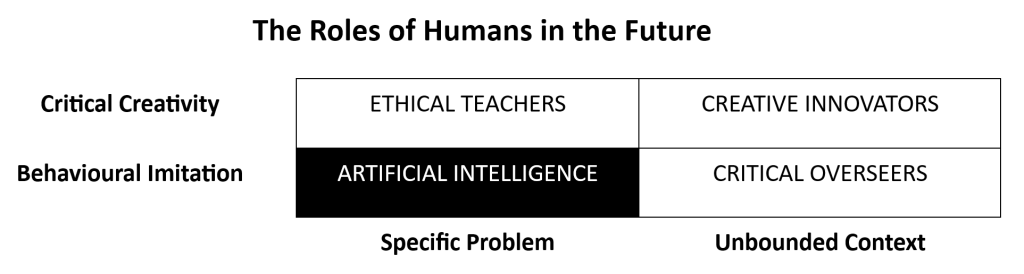

For artificial intelligence to be able to truly evolve further, it needs humans (Figure 2).

Figure 2: Humans are needed to innovate, educate, and oversee AI systems that are increasingly widely deployed for new purposes.

In the future, the role of humans is to be creative innovators who question existing ways of working and normative objectives. Humans are therefore needed for formulating new types of questions and prioritizing objectives rather than executing reasoning paths or performing routine tasks.

Humans will also be needed to design new application areas and ways of using AI for specific problems. We are needed to teach AI to operate ethically and intelligently, whatever problem it is working on, to avoid the potential negative consequences of failures, biases, and fraud.

Humans also play a vital role as critical quality controllers who oversee, control, and regulate AI training practices, reasoning logic, and decision outcomes on a larger scale beyond just a single problem. While predefined quality control in a closed system can be automated, and machines can monitor machines, humans are necessary to detect unknown unknowns in an open system. That is because even the smartest machines become unpredictable when exposed openly to previously unknown and uncontrollable environmental influences. Thus, human oversight is crucial in ensuring that AI operates safely and effectively in unbounded and dynamic contexts.

Turing’s and Polanyi’s ideas seem to be coming true through increasingly common, everyday examples. Both were partially correct. The machines Turing envisioned are indeed learning to imitate humans in increasingly challenging tasks, doing specific tasks faster, better, and cheaper than humans. But in terms of Polanyi’s emphasis on personal consciousness, goal setting, and emotionality, machines have not really progressed anywhere. Conscious machines that question their teachers of their own will still belong to the realm of fairy tales, alongside time machines and perpetual motion machines.

Author

Sami Laine has been working for 20 years as a data management practitioner, consultant, trainer, and researcher across several industries. His specialty is business-oriented data quality management from strategy to practical implementation. As the vice president of DAMA Finland ry, he promotes awareness and dissemination of good practices in data management as part of the global DAMA International community and the national Finnish Information Processing Association – TIVIA ry community.

Comment

This article is based on the presentation “Artificial Intelligence: Challenges and Opportunities”, given by Sami Laine of DAMA Finland ry at the ‘Artificial Intelligence in the Workplace Community’ seminar organized by Sytyke ry in 2018 on a cruise ship on the way from Helsinki, Finland to Stockholm, Sweden. A partial version of the presentation was published as an article (link) in the Finnish-language Sytyke magazine in the same year; this article is a translated version of the original presentation and that article.

References

[1] Athalye, A., Engstrom, L., Ilyas, A., Kwok, K. (2018) “Synthesizing Robust Adversarial Examples”, ICML 2018. Online video: https://www.youtube.com/watch?v=piYnd_wYlT8

[2] NTSB (2020) “Collision Between Vehicle Controlled by Developmental Automated Driving System and Pedestrian, Tempe, Arizona, March 18, 2018”, National Transportation Safety Board, Washington DC, United States.

[3] Ayers JW, Poliak A, Dredze M, et al. (2023) “Comparing Physician and Artificial Intelligence Chatbot Responses to Patient Questions Posted to a Public Social Media Forum”, JAMA Intern Med. Published online April 28, 2023. doi:10.1001/jamainternmed.2023.1838